The history of science is littered with discarded theories, revisions, surprises, shocks, and sometimes even revolutions. In recent days, we’ve seen a couple of great examples of apparently seismic shifts in our understanding of some key issues in neuroscience. For one, the dramatic revelation of some image-processing monkey business has given the beta-amyloid theory of Alzheimer’s disease a run for its money (1). For another, a recent publication from the lab of Joanna Moncrieff has continued her assault on the serotonin hypothesis of depression and on the presumed mechanism of action of anti-depressants (2). The problem with these kinds of developments, even though they are entirely to be expected in the long run of science (3), is that they can sometimes shake popular confidence. I think that at the moment we are seeing lots of this kind of doubt about science. I call it the RWGRWB phenomenon (“one day they tell us Red Wine is Good for us and the next day it’s the opposite”). And there’s something weirdly bicameral happening, it seems to me. There are some bits of science in which we have unshakeable faith even though they are not well grounded (e.g., computers can understand who we are better than we understand ourselves) and others that are in heavy dispute even though they have enormous scientific support (e.g., vaccines work). I have ideas about why this is happening that I’ll save for another time.

Though these issues are enormously important and, I think, interesting, I want to go in a slightly different direction today and talk about the influence of the methods of science on our understanding of how things work. And I don’t mean the kind of hypothesis-experiment-result stuff that we all (hopefully) learned in school, but the actual kit that we use to do science. The machines that go ping. For example, very early on, so early in fact that there wasn’t really science as we understand it, there were ideas about something called pneuma. Pneuma was the substance, or system of substances, that made things go. Your breath was one kind of pneuma. Your blood carried another. And your nerves carried yet another. This idea had its origins literally thousands of years ago, but it hung on at least until the time of René Descartes in the 17th century. Descartes imagined entire systems of neuroscience constructed around the idea that the nervous system was an elaborate pneumatic pump. He was heir to a tradition vividly captured more than a century earlier in Leonardo da Vinci’s gorgeous drawings of neuroanatomical structures, which focused on the brain’s ventricles (fluid-filled cavities deep inside your brain) and which Leonardo and many others believed supported pneuma theory. It wasn’t until much later, in the late 18th century, that Luigi Galvani’s inspired and slightly ghoulish experiments with frogs began the era of bio-electric theories of nervous function (4).

It’s taken me a little time to get to my point, but it’s this: it would have been incredibly difficult for Leonardo to understand the real anatomical basis of brain function, because he didn’t have the tools (microscopes, histological methods, and so on) to see brain cells. But he could easily localize the ventricles and even make three-dimensional casts of them by filling them with molten wax and peeling away the overlying tissue. In other words, this is the best case that I can think of in which the methods available to us have influenced how we think things work, and not for the better. I’ll leave it as an exercise for you to think of other examples (5). The important thing is that a methodology (in this case, the making of wax impressions) can act like a runaway train that pulls an entire field of science in a particular direction.

Image of eye tracking study by Alfred Yarbus

And now to the eyes. Beginning with some fascinating work by Alfred Yarbus, a Russian psychologist working in the 1950s and ’60s, people in my field have had a minor obsession with recording eye movements. Yarbus invented a method for accurate eye tracking in which a tiny mirror was mounted on a small suction cup attached to the eyeball (ouch…). As the eye moved, a beam of light shone on the mirror bounced around on a screen, and with the appropriate arithmetic Yarbus was able to convert the movements of the light beam into data about the movements of the eye. His heroic labours resulted in some of the first really interesting findings about eye movements, such as the discovery that the way we scan an image depends very much on what we are told about it and what we are trying to find out. These days, eye-tracking methods have become much more accurate and flexible. We can measure eye movements with great precision and can even do a pretty good job of tracking the eyes of people walking around in the real world. You’ve probably seen loads of images based on studies of people looking at all kinds of things: faces, items in a grocery store, the fronts of buildings, web pages, and so on. Scientists, marketers, and raconteurs have all made hay with this amazing technology. But here’s the thing: as fascinating as it all is, eye tracking places very heavy emphasis on one kind of vision and, honestly, probably not the most important kind of vision for much of everyday life. Eye-tracking technology may be the 21st-century equivalent of Leonardo’s wax impressions of the ventricles. To understand why, we have to take a short detour into visual neuroscience. I will try to make it painless. Possibly even a bit interesting!
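Yarbus’s “appropriate arithmetic” rests on a simple optical-lever principle: by the law of reflection, when a mirror turns through an angle θ, the reflected beam turns through 2θ. The sketch below is a simplified illustration, not a reconstruction of Yarbus’s actual apparatus or calibration. It assumes rotation in a single plane, a flat screen perpendicular to the resting beam, a mirror that turns rigidly with the eye, and hypothetical distances; the function name `eye_rotation_deg` is purely illustrative.

```python
import math


def eye_rotation_deg(spot_offset_mm: float, screen_distance_mm: float) -> float:
    """Estimate how far the eye turned from how far the light spot moved.

    Assumes a flat screen perpendicular to the reflected beam when the eye
    is at rest, and a mirror that turns rigidly with the eye. Turning the
    mirror by theta turns the reflected beam by 2 * theta, so the spot lands
    screen_distance * tan(2 * theta) away from its resting position.
    """
    # Angle through which the reflected beam has swung, in radians.
    beam_deflection = math.atan2(spot_offset_mm, screen_distance_mm)
    # The mirror (and so the eye) turned through half that angle.
    return math.degrees(beam_deflection / 2)


# Hypothetical numbers: screen 1 m from the eye, light spot moves 35 mm.
print(f"Eye rotated by about {eye_rotation_deg(35.0, 1000.0):.2f} degrees")
```

With the screen 1 m away, a 35 mm shift of the spot corresponds to roughly a 1-degree turn of the eye. The doubling is what makes the approach sensitive: small eye rotations become large, easily measured movements of the light.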

From eye to brain

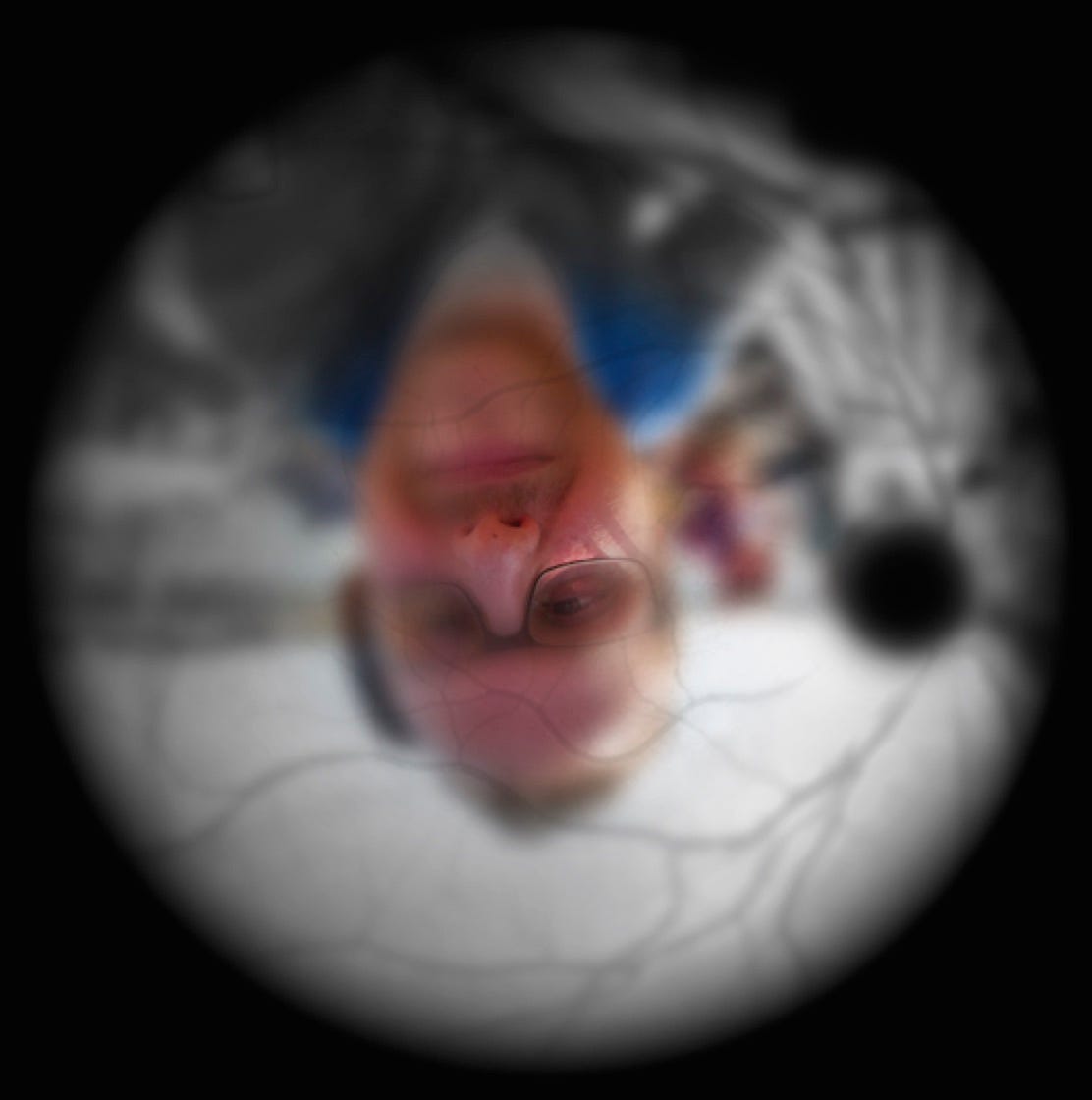

Most people have heard of a part of the eye called the fovea. It is an intensely specialized region of the retina (the sheet of light-sensitive cells at the back of the eyeball, where vision begins). What’s less well appreciated is that the fovea is very small (about 2 mm in diameter) and that it takes in only a very small part of the visual world at any given moment. For perspective, hold your thumb out at arm’s length and look at it. Your thumb roughly fills the fovea. Anything beyond your thumb falls outside of what we call foveal vision. This turns out to be really important, because once we stray from the fovea, the nature of what we can perceive changes markedly. Colour vision falls off sharply outside the fovea. Detailed vision (or, in the lingo of the vision scientist, visual acuity) drops off rapidly. The beautiful image here gives you a sense of how this all works. Fovea = colour and detail. Outside the fovea (peripheral vision, as it’s called) = blobby and blurry (6).

Ben Bogart (Own work) [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons
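The thumb demonstration is really a statement about visual angle, the angle an object subtends at the eye: 2·atan(size / (2 × distance)). A minimal sketch in Python follows; the thumb width (2 cm), arm’s length (57 cm), and the roughly 200-degree horizontal extent of the visual field are assumed round numbers, not measurements from this post.

```python
import math


def visual_angle_deg(object_size_cm: float, viewing_distance_cm: float) -> float:
    """Angle (in degrees) that an object subtends at the eye."""
    return math.degrees(2 * math.atan(object_size_cm / (2 * viewing_distance_cm)))


# Assumed round numbers, not measurements: a 2 cm wide thumb held 57 cm away,
# and a horizontal visual field of roughly 200 degrees.
THUMB_WIDTH_CM = 2.0
ARM_LENGTH_CM = 57.0
FIELD_WIDTH_DEG = 200.0

thumb_deg = visual_angle_deg(THUMB_WIDTH_CM, ARM_LENGTH_CM)
share = 100 * thumb_deg / FIELD_WIDTH_DEG
print(f"Thumb at arm's length: about {thumb_deg:.1f} degrees "
      f"({share:.0f}% of the horizontal field)")
```

Under these assumptions the thumb subtends about 2 degrees, roughly 1% of the horizontal field. The 57 cm distance is a convenient choice because at that distance 1 cm on an object subtends very nearly 1 degree.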

This distinction between foveal and peripheral vision turns out to be incredibly important, as you can imagine. In fact, entire books have been written about it, and I don’t think it is an exaggeration to say that the organization of the retina into centre and periphery is one of the most important things to know about vision and the brain. Much of what happens downstream in our visual system, in the hoary reaches of the neocortex that feature so prominently in those seductive multi-coloured brain-imaging pictures we like to look at, is predicated on this early division of labour in the retina.

But now, so that I don’t end up writing my own textbook here, let’s leave this detour into visual neuroscience and return to my main point. It has to do with eye movements. Given what I’ve written above, it’s important to recognize that probably 95% of research using eye tracking of whatever provenance, from marketing research to hard-core cognitive neuroscience, focuses on what’s happening at the fovea. That’s what we are really tracking. And that’s not a completely wrong idea. For all kinds of purposes, foveal vision is key. We can’t read without it. We can’t make out the fine details of objects, nor understand very much about their colours. But the problem with this technology is that it leaves out a big part of the story. It makes us feel as though foveal vision is all that matters when we engage with a scene.

Feeling at the edges

Luckily, there are some fine researchers looking at the role of peripheral vision in human behaviour. Though I wouldn’t by any means call myself a leading expert in this area, we’ve now done quite a few studies of the peripheral visual system (7). We are especially interested in understanding how what happens at the edge of vision influences how we relate to built environments of all kinds. And our early findings, along with those of others, suggest a couple of really interesting things. First, the peripheral visual system is extraordinarily good at picking up information very quickly. We can flash an image at a person’s eyes for about 50 milliseconds (a twentieth of a second, considerably less than the time it takes to blink), and though they may not know what they’ve seen, they can tell us how much they liked it, and they can make sensible discriminations between, say, images of nature and images of buildings. Other research that we’ve done has led us to think that whatever is going on in peripheral vision may contribute to our sense of atmosphere. Though atmosphere is notoriously hard to define clearly and scientifically, you know what I mean when I suggest that any setting in which you find yourself has an atmosphere, and whatever that ephemeral thing is, you know that it can change the way you feel about a place and what you do when you are there.

The influence of the visual periphery is abundantly obvious in everyday life. When I have the claustrophobic feeling of being surrounded by skyscrapers, part of that feeling comes from what’s happening in the upper reaches of my visual field and not where my fovea is bouncing around, taking in details. When I’m awe-struck as I walk into a magnificent cathedral, it’s the visual periphery that contributes to those sublime feelings. But the periphery is less often the focus of research in this area, because it’s a little subtle even to think about how to measure its contributions. In our lab, we’ve used clever methods pioneered by other people in this field to restrict vision to the peripheral visual field so as to highlight its contribution to behaviour. Conventional inferences from eye-tracking studies can no more get at this stuff than Leonardo’s waxen ventricles could have told us the story of how brains work. Equating architectural experience with what happens at the fovea leaves out massively important parts of the story. I think this has happened to our field partly because of methodological expediency, but also because of a failure to recognize the real mechanics of biological vision.
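The post doesn’t say which methods the lab used to restrict viewing to the periphery. One widely used family of techniques in vision research is the artificial scotoma: a mask that blanks out the central region of an image, sometimes updated continuously to follow the eyes in a gaze-contingent display. The sketch below shows only the geometry of such a mask, assuming a hypothetical viewing distance and screen pixel density; the function names are illustrative, and a real experiment would also need an eye tracker and careful display timing, which this ignores.

```python
import math


def pixels_per_degree(viewing_distance_cm: float, pixels_per_cm: float) -> float:
    """Approximate pixels spanned by 1 degree of visual angle at the screen centre."""
    # Width on screen of a 1-degree wedge centred on the line of sight.
    cm_per_degree = 2 * viewing_distance_cm * math.tan(math.radians(0.5))
    return cm_per_degree * pixels_per_cm


def central_scotoma_mask(width: int, height: int, gaze_x: float, gaze_y: float,
                         radius_deg: float, ppd: float) -> list[list[bool]]:
    """Return a height x width grid that is True where a pixel should be hidden.

    Pixels within radius_deg of the gaze point are masked, so only
    peripheral vision has access to the rest of the image. A single
    pixels-per-degree factor is a fair approximation near the centre of a
    flat screen but becomes less accurate at wide eccentricities.
    """
    radius_px = radius_deg * ppd
    return [
        [math.hypot(x - gaze_x, y - gaze_y) <= radius_px for x in range(width)]
        for y in range(height)
    ]


# Hypothetical setup: 60 cm viewing distance, about 40 pixels per cm.
ppd = pixels_per_degree(60.0, 40.0)
mask = central_scotoma_mask(320, 240, gaze_x=160, gaze_y=120, radius_deg=2.5, ppd=ppd)
hidden = sum(row.count(True) for row in mask)
print(f"{ppd:.1f} px/deg; {hidden} of {320 * 240} pixels hidden")
```

Inverting the mask gives the complementary “window” condition, in which only the central region is visible, which is often run alongside a scotoma so the two contributions can be compared.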

To finish off, I’ll just say that there were a couple of reasons why I wanted to talk about the edge of vision today. One is that over the past few posts I’ve been pretty hard on efforts to neurologize architecture. To some extent, that’s warranted. There are neurobollocks in the field. I see them every day. But at the same time, I want to make it clear that there are cases, and will probably be more in the future, where an understanding of brain organization can yield important insights into architectural experience that would be very hard to reach in any other way. I think that the design of the visual system is an excellent example of this. The way that vision works is markedly at odds with the way we think about vision in the everyday sense (8). Understanding how biological vision works advances our understanding of architectural experience, without question.

The other reason for writing about work in this area is to make the fascinating and sometimes forgotten point that, in science, what we (think we) understand is very much conditioned by the tools we bring to bear on a problem. For an outside observer, it’s easy to lose sight of that and to imagine that science brings us some unvarnished, unmediated version of The Truth. That’s something science is not equipped to deliver, whatever the tool of the day might be.

Footnotes

(1) The jury is still out on how significant this scandal is for Alzheimer’s research in general, but it does seem fairly solid that some key papers describing the neuropathology of the disease included some faked data.

(2) Moncrieff has been arguing for some time that the types of medications given for depression rest on some pretty shaky findings. I agree with her to some extent that we don’t really know why anti-depressants work (when they do, which seems to be only sometimes) and that they have been prescribed far too broadly as a simple measure to treat an enormously complex set of disorders.

(3) It’s a common misunderstanding that science progresses in a logical, linear fashion. In some ways, science can be considered a method for long-term error correction, with the unfortunate side-effect that errors can never be eliminated entirely. Many people have big problems with the essentially probabilistic nature of scientific findings, but those are the rules of the game. What can’t be disputed, though, is that scientific methods have resulted in massive developments in technology and medicine, not to mention a refined understanding of the basic bits of the universe and how they work.

(4) Galvani, in case you haven’t encountered his work before, was the person who used very ingenious methods to demonstrate that the basis of activity in the nervous system was electrical and not pneumatic. Frogs were involved.

(5) I confess that I just spent a good hour staring off into space trying to think of such examples but without success. My wife, who knows everything, pointed out that this is because I need more rest. And then she quickly came up with the example of the serotonin hypothesis for me, again just proving that she really does know everything.

(6) On the positive side of the balance sheet, vision outside the fovea makes up for its lack of precision with a surfeit of sensitivity. That’s why, when you look up at the sky on a clear, dark night, you can see many more stars with your peripheral vision than with your fovea.

(7) When someone like me uses the word “we,” it is most often code for “my grad student did the hard graft and I’m the one talking about it.” In this case, that grad student is an exceptional researcher named Jatheesh Srikantharajah.

(8) It still blows my mind when I explain every year to beginning students of vision that the feeling we have that our entire visual world is perpetually available in panoramic, full-coloured three dimensions is largely illusory. Our visual worlds are put together one tiny snapshot at a time by machinery that knows how to make a convincing montage.

What I’m reading

It’s been a busy book week or two for me. On the fiction front, I’ve just finished a book called A Waiter in Paris, by Edward Chisholm. It’s a pretty interesting autobiographical account of a young British man exploring the seamy underbelly of the Parisian restaurant world. It’s no Kitchen Confidential, but it was still a fun, fast read.

On the edifying non-fiction front, and in an effort to advance a long-form project I’m struggling with, I re-read Jane Jacobs’ Dark Age Ahead. It seems apt for the times and is beautifully written, but I felt the same way after the second reading as I did after the first. Jacobs picked a few reasons to worry about our future, and she wasn’t wrong, but she focused on their implications for how we design cities. Not at all surprising considering the woman’s expertise, but I yearned for a little more. Still, as with all of her writing, astonishing prescience. I’m also currently reading Neil Postman’s The End of Education. Postman is one of those writers who makes me want to give up. He is so clear and precise in every sentence that I can’t imagine writing anything half as worth reading. The main premise of the book is that almost all discussion of education is about how, and almost none of it is about why.

It’s a great book to read as I approach a new teaching term in my day job.

What I’m eating

I’ve scoured my phone for images of interesting food I’ve eaten recently and drawn a blank. So here I’ll just leave a promissory note and say that after I get up from the keyboard I’m going to go down to the kitchen and attempt to make a baked Alaska (nothing to do with Sarah Palin…). I’ve never done this before. Pictures next week whether it works out or not. If I end up with a kitchen on fire and an overcooked meringue, that’s what you’ll see.
